Cloud Security And Azure Private Link

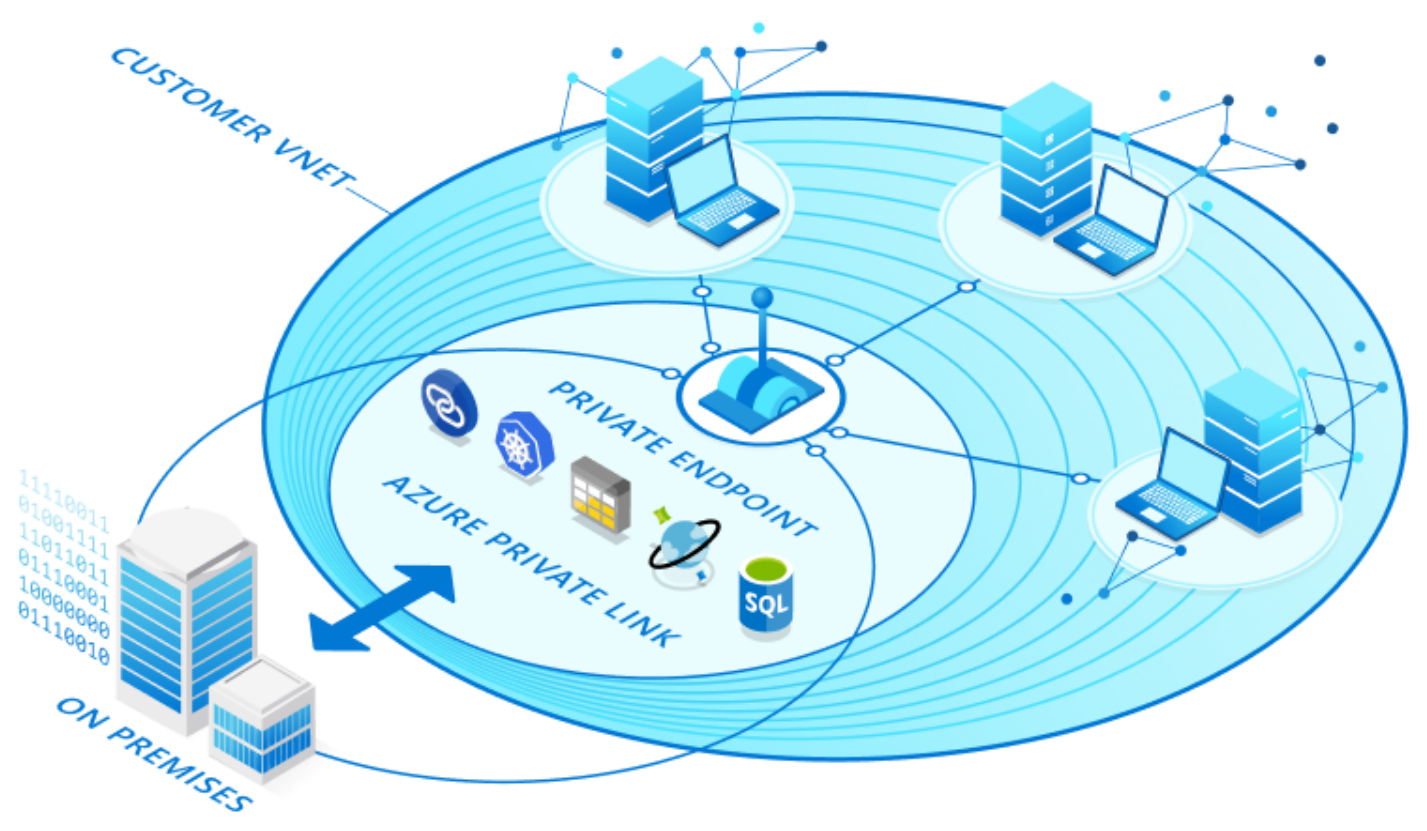

Azure Private Link enables access to hosted customer and partner services over a private endpoint in an Azure virtual network. This means private connectivity over your own RFC1918 address space to any supported PaaS service while limiting the need for additional gateways, NAT appliances, public IP addresses, or ExpressRoute (Microsoft Peering).

Hold on, wasn’t the point of Public Cloud to leverage services offered by third-party providers over the public internet? Why, then, would we want to contain traffic in our private IP space, which is likely routable across our on-premises network? Let’s examine this a little deeper to understand the problem we are aiming to solve, solutions available today, some complexities introduced with Private Link, and why we wouldn’t just use PaaS services as is.

Microsoft announced Private Link in preview in 2019, and it became generally available in early 2020. AWS released its equivalent, AWS PrivateLink, back in 2017. Google Cloud released Private Service Connect in 2020.

The Problem

Leveraging PaaS services is kind of like owning a home. Except in that home, you don’t have to fix cracks in the foundation, patch that leaky roof, clean the garage, or even mow the lawn. PaaS services significantly reduce infrastructure management and increase agility while simplifying your ability to scale. Security-focused teams will usually identify the following as problems when talking PaaS:

- The risk of data leakage / exfiltration increases as PaaS integration with IaaS and on-premises environments increases

- Decreasing or eliminating internet exposure is preferred; Azure PaaS services were originally available via public IP addresses only

- Anything that runs over a network that isn’t your own private WAN is risky and must be avoided

While I understand and share the concerns about risk here, these are obstacles I have run into with various security teams throughout my time in tech. I can look back on some tough conversations from when I was advocating for SD-WAN: since it went over the internet, the argument went, it couldn’t be reliable and introduced significant risk to the business. Fast-forward to 2021, especially in the current state of the COVID-19 pandemic, and most large enterprises now declare the internet as the new network, public cloud as the new data center, and identity as the new perimeter.

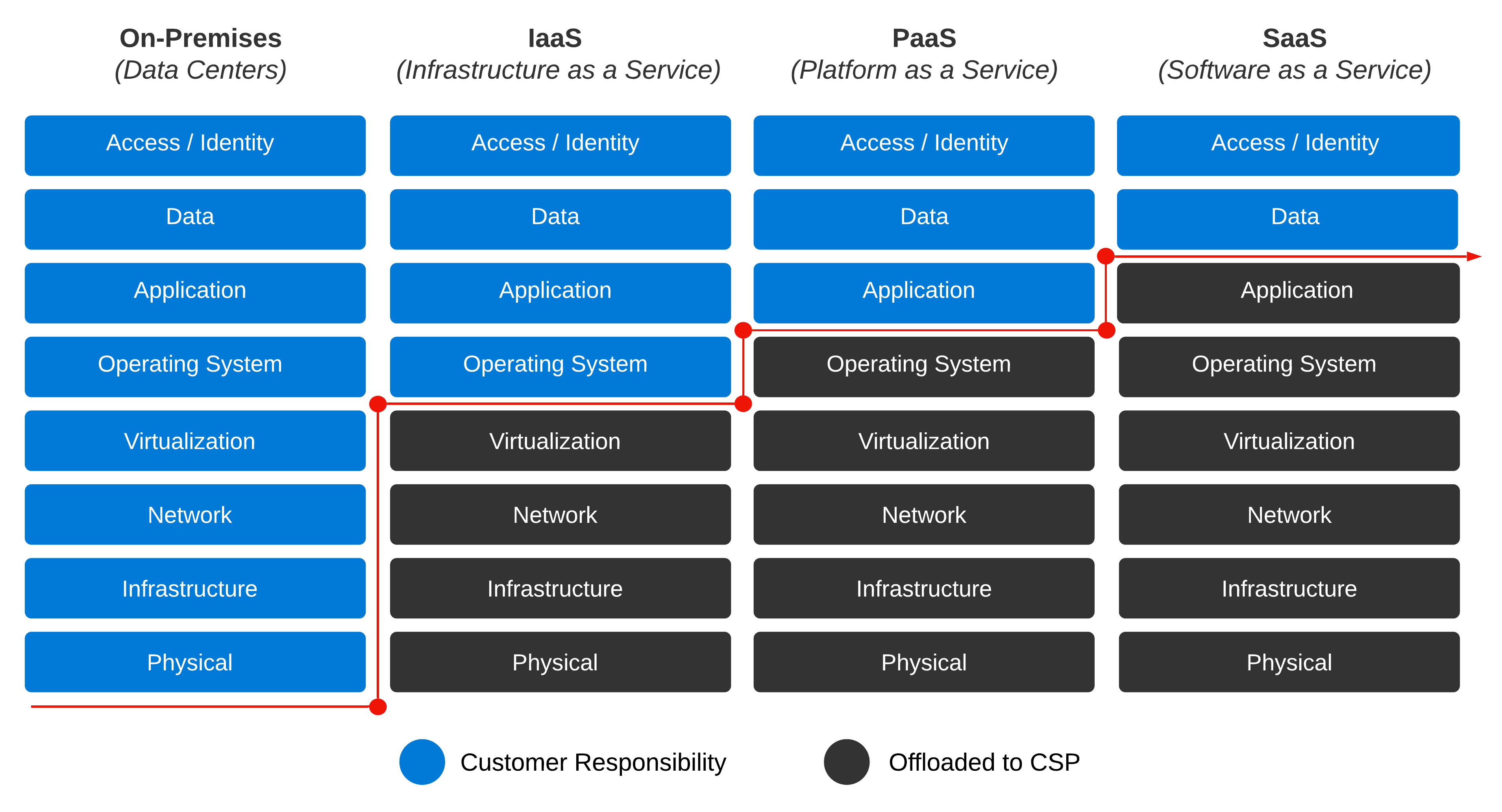

Examining Cloud Security Models

Our scope here is security in the cloud, not security of the cloud. Security considerations differ in the cloud by service model. In descending through each service model’s different layers, the responsibility for security shifts between Customer and Provider.

For example, with SaaS, the set of layers a customer is responsible for is relatively small: only data and access/identity. As you move into PaaS, the application layer, along with some platform configuration, comes into play. Moving into IaaS, the operating system becomes the customer’s responsibility, along with all the layers above it.

When you have a nice mixture of the three cloud layers and the full gamut of all things data center, thinking through the security domain becomes complicated quickly. Add some Multi-Cloud to this venue, and you are in for a real party.

Risky Patterns

In the Digital Era, privacy is at the top of the list. This is especially true when considering specific regulations like GDPR or HIPAA. A data leak can cause a significant loss in revenue and long-term damage to your brand. Certain patterns expose a number of porous areas, which contribute to possible data leakage if not appropriately hardened.

- Identity & Access Management (IAM) - Access based on identity authentication and authorization controls; The ground floor for establishing Zero Trust in the public cloud

- Cloud Endpoints to On-Premises - Services in public cloud provider’s managed network need to make calls to on-premises private networks

- On-Premises to Cloud Endpoints - Exact opposite of the previous pattern; Services in public cloud provider’s managed network need to be called from an on-premises private network

- Internet Egress - Any point of internet exit; A sinister concoction of data center places, properly designed cloud places, shadow IT oops cloud places, and… plenty of other venues just south of anyone’s radar

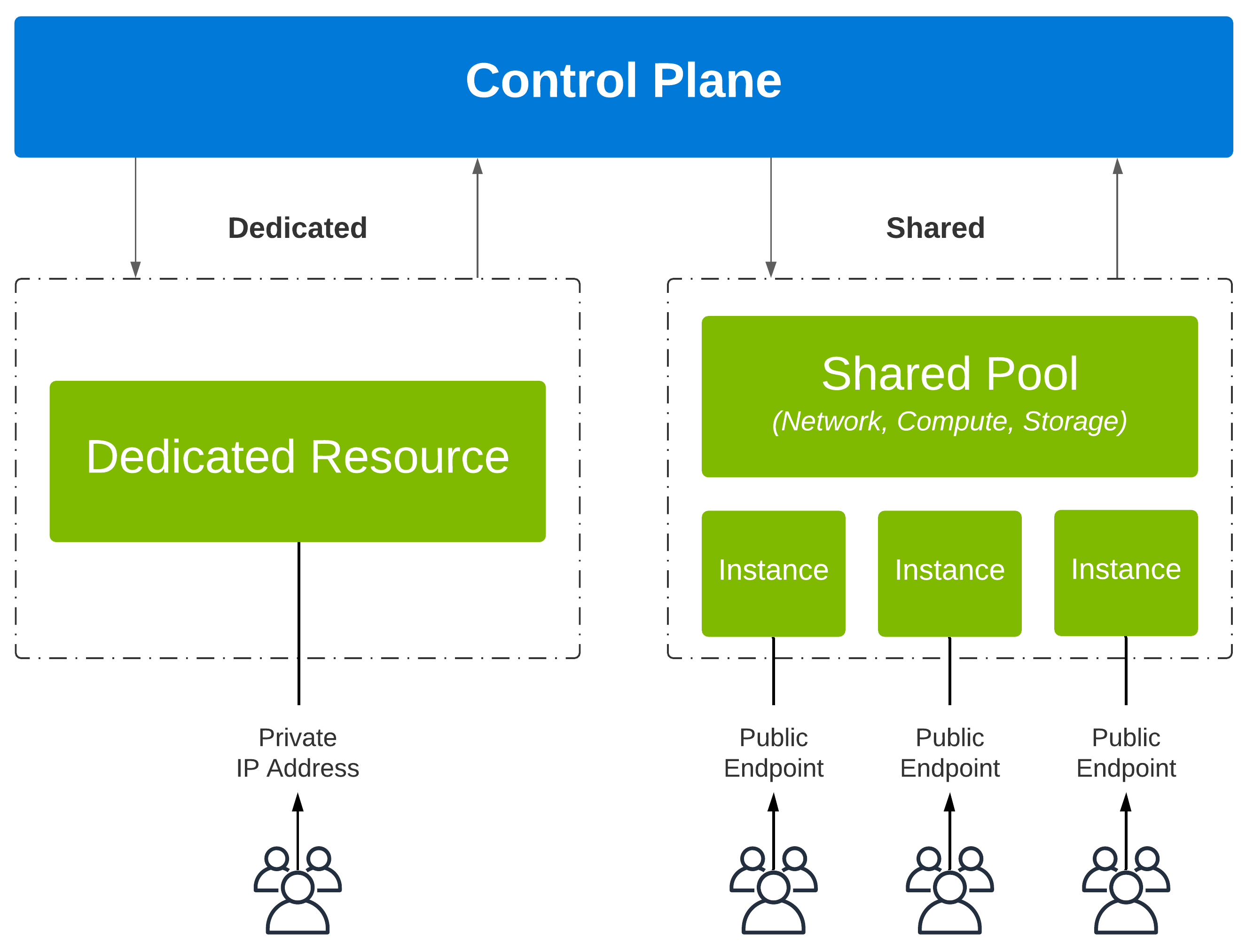

A Word On Provider Architectures

For public cloud to achieve the massive scale-out which accommodates customer growth, cloud services are built on large pools of resources (network, compute, and storage). This large pool of resources is managed by a centralized control plane. As demand increases, additional resources are added to the pool to increase capacity.

Service Behavior

There are ultimately two ways in which services can be categorized based on networking behavior. Since this write-up is Azure focused, I’ll map Azure specific constructs into the examples:

Public Services are typically what we think of when referencing PaaS. Public Services are consumed over the internet, reachable via public IP addresses, and are not provisioned in the customer’s VNet. An example of a public service would be Azure Storage.

Private Services, by default, are not reachable over the internet. Services are assigned RFC1918 addresses and are deployed directly inside a customer’s virtual network. An example of a private service would be an Azure Virtual Machine.

Resource Allocation

When customers provision a service, the control plane will create an instance of the service. Resources are then allocated accordingly. There are generally two ways in which a service can allocate resources to an instance:

Shared Services - Resources are allocated to more than one service instance; Each instance consumes resources simultaneously.

Dedicated Services - Resources are allocated to a single instance; These resources remain dedicated for the instance’s entire lifecycle.

Integration

If you are going through the cloud migration journey, you likely have a strategy that includes some combination of rehost, replatform, and refactor. At some point, you will probably be faced with integrating cloud PaaS services with IaaS and even back on-premises.

Before Service Endpoints

Azure SQL DB is a great way to migrate your SQL Server databases without changing your apps, making it extremely popular. Before Service Endpoints came along, how would a virtual machine communicate to Azure SQL?

- Azure SQL DB is available via public IP address and listens on port 1433

- The virtual machine has direct connectivity to the internet via NAT’ed IP address

- The virtual machine leverages this for internet egress to reach Azure SQL service

- Azure SQL service sees the NAT’ed IP address, not the private IP of the virtual machine

- No restrictions can be enforced to limit service ingress to your private IP addresses only
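The last two points can be illustrated with a minimal sketch. It assumes a hypothetical VM with private IP `10.5.1.10` that egresses through a hypothetical NAT'ed address `203.0.113.25`, and a firewall rule we would *like* to write that only admits the private subnet. None of these values come from a real deployment, and the helper names are made up for illustration.

```python
import ipaddress

# The three private blocks defined by RFC1918.
RFC1918_BLOCKS = [
    ipaddress.ip_network("10.0.0.0/8"),
    ipaddress.ip_network("172.16.0.0/12"),
    ipaddress.ip_network("192.168.0.0/16"),
]


def is_rfc1918(address: str) -> bool:
    """Return True when the address falls inside an RFC1918 block."""
    ip = ipaddress.ip_address(address)
    return any(ip in block for block in RFC1918_BLOCKS)


def firewall_allows(source_seen_by_service: str, allowed_ranges: list) -> bool:
    """Model an IP firewall: allow only sources inside one of the ranges."""
    ip = ipaddress.ip_address(source_seen_by_service)
    return any(ip in ipaddress.ip_network(r) for r in allowed_ranges)


# Hypothetical values, for illustration only.
vm_private_ip = "10.5.1.10"     # what the VM believes its address is
vm_nat_ip = "203.0.113.25"      # what the SQL service actually sees
desired_rule = ["10.5.1.0/24"]  # the rule we would like to enforce

print(is_rfc1918(vm_private_ip))                 # True
print(is_rfc1918(vm_nat_ip))                     # False
print(firewall_allows(vm_nat_ip, desired_rule))  # False
```

Because the service only ever sees the NAT'ed address, a rule written against the private range never matches, which is why the service firewall cannot be scoped to your private IP space in this design.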

Why Is This Risky?

To filter traffic in this scenario, the virtual machine would need a public IP address. This presents the following complications:

- Virtual Machine now allows ingress directly from the internet (This is never a good practice)

- The subnet that hosts the virtual machine would require a NAT gateway; More configuration with potential performance implications

- Azure SQL would need to be open to clients on any network; In the event credentials are leaked, anyone on the internet could gain access

Tradeoffs

Aside from being inflexible in controlling ingress and egress traffic, the route is not optimal. Traffic is technically going out to the internet, although in practice it is probably routed across Azure’s external backbone and back in. The service is reachable via public IP address only. Considering the design and various integrations required to make it work, there could be significant opportunities for data leakage or exfiltration.

VNet Integration

VNet Integration is leveraged for services whose design matches the dedicated services architecture. Also called VNet Injection, this method deploys a given service directly in your VNet. As a solution, it enables better continuity for workloads operating in a tiered hybrid design.

Problems Solved By VNet Integration

- Injected services are exposed over RFC1918 addresses directly from your VNet

- As a dedicated platform, none of the components are shared across other customers; This could be a requirement in some cases

- At the network layer, the behavior would be similar to that of a virtual machine in the same VNet

- Communication to virtual machines in same VNet and in peered VNets is bidirectional

- Communication between on-premises environments over ExpressRoute - Private Peering or VPNs is bidirectional

- Inbound connections from routable IP addresses outside of the VNet address space are possible when the service is exposed behind a public IP address; Best to use an external load balancer / front-end IP address

- VNet Source NAT can be used to initiate connections to internet routable IP addresses

VNet Integration Tradeoffs

Although VNet Integration was created to deploy App Services privately, is all of it really private and exclusive to only your VNet? While the service itself is dedicated, the control plane managing the services is not. Integrated services require inbound and outbound connections to platform-managed public IP addresses, which facilitate interaction with the control plane. Whether this is a tradeoff is debatable.

- Integrated services are deployed into an isolated subnet; Each service has its own subnet sizing guidelines

- Each service identifies the required dependencies for control plane communication; For example, the App Service Environment dependencies are listed in its official documentation

- Increased complexity in managing NSGs and UDRs; Service Tags should be used to reduce overhead, as the mapping between tag and addresses is automatically managed by the platform

- Ultimately, each service will exhibit different behavior and likely has different requirements; Always consult the official documentation

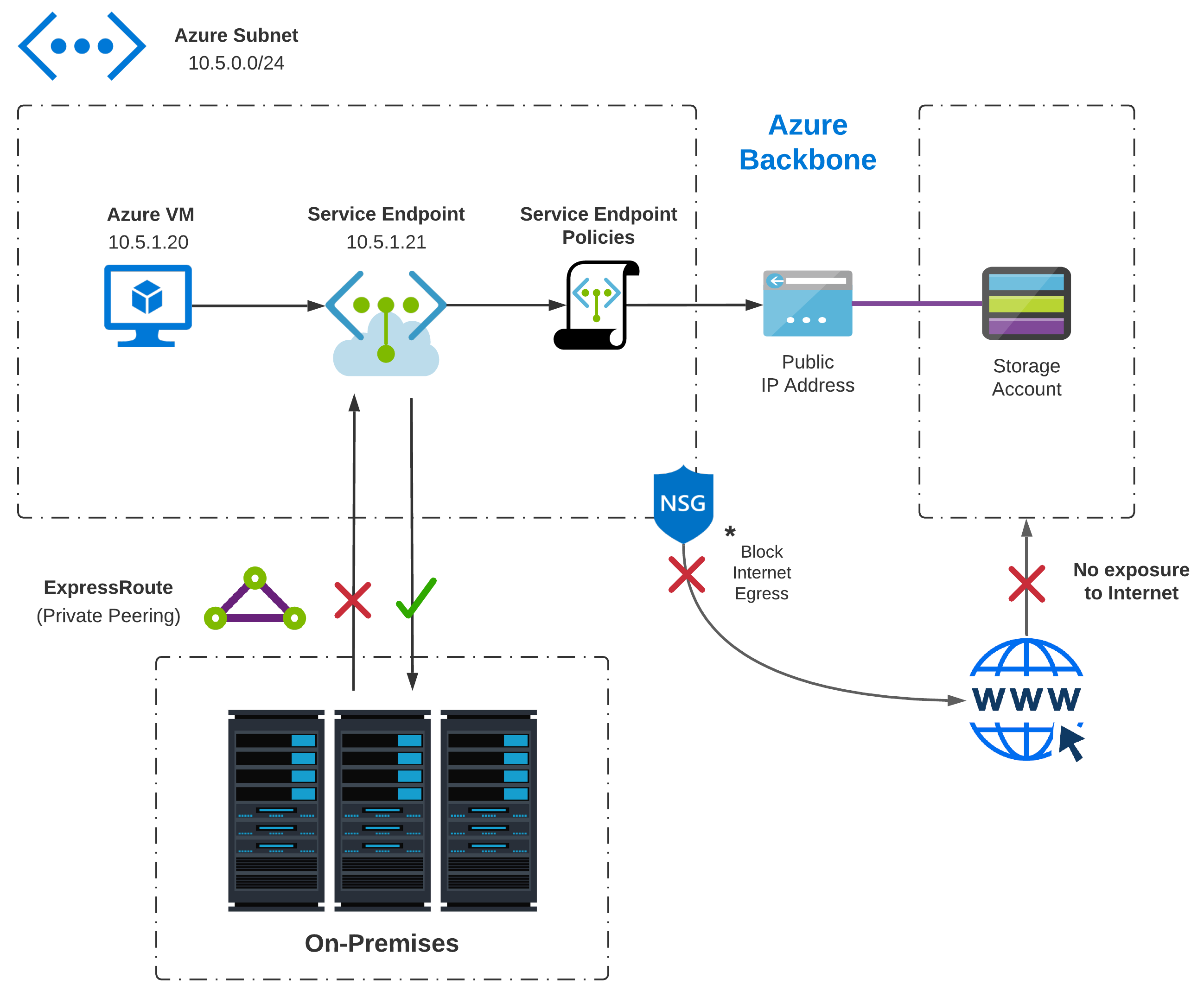

Service Endpoints

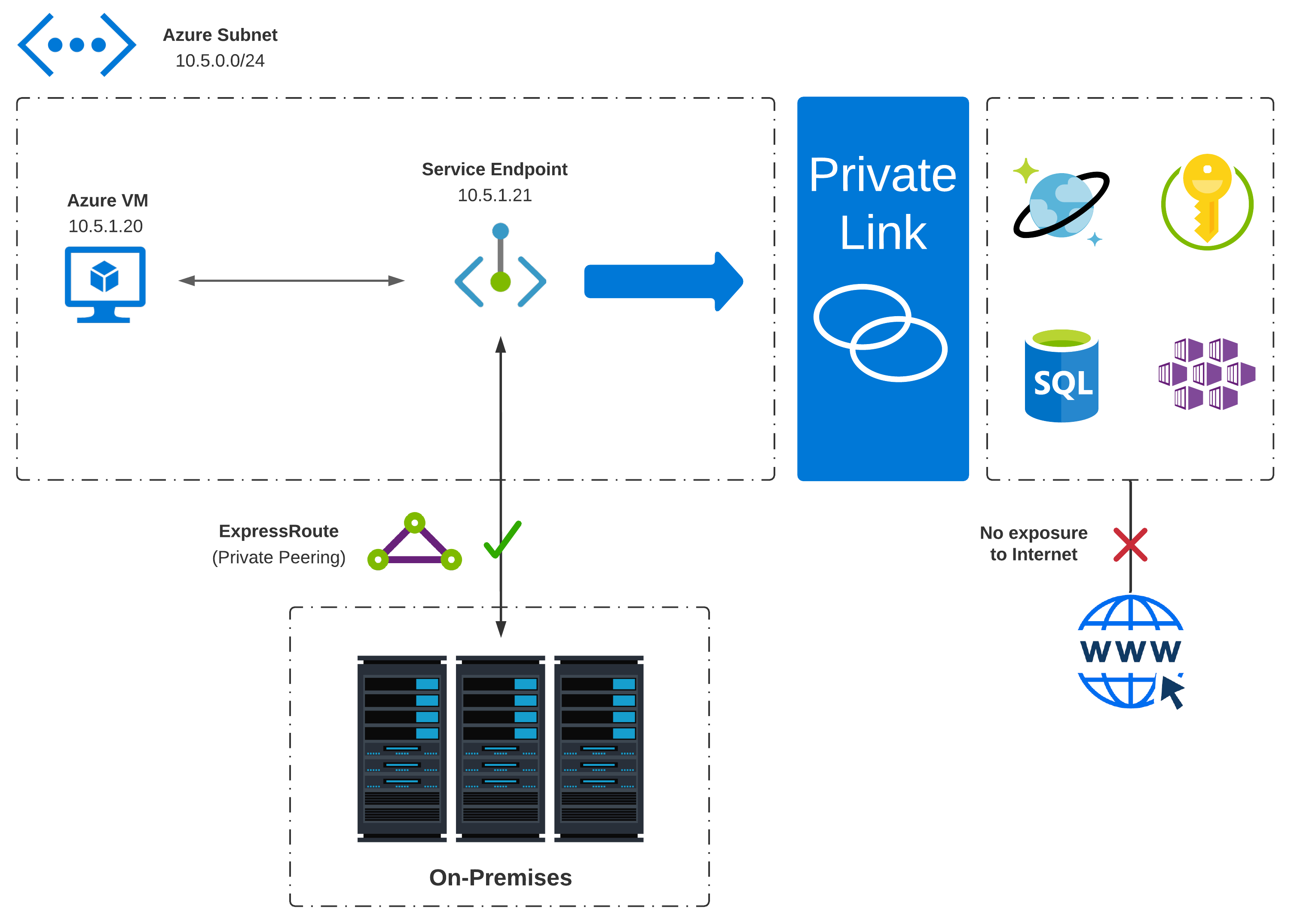

Service Endpoints as a solution can be applied to select PaaS services with a shared architecture. A route is created from your PaaS service into the desired VNets.

Problems Solved By Service Endpoints

- After enabling a service endpoint on a subnet, the source IP addresses the service sees for resources in that subnet switch from public to private IP addresses

- Traffic from a given virtual machine in a private subnet can now talk directly to Azure SQL without requiring internet egress; Without a public IP address, bad actors can’t scan a virtual machine’s open ports for vulnerabilities, thus limiting application downtime and data theft

- Service Endpoints ensure traffic egressing a given subnet is tagged with the subnet ID; Traffic inbound to Azure SQL can then be identified and blocked except for your desired subnet IDs (like the subnet which hosts the service endpoint)

- An NSG can then restrict all egress traffic from the subnet to the internet, thus locking down communication both ways

- Connecting to Azure Services from your VNet happens with an optimized route over Azure’s internal backbone network; This reduces network hops, which increases performance (In most cases)

Setting Up A Service Endpoint

When provisioning a compatible service like Azure Key Vault, you will have an option to use Public Endpoint (Selected Networks) during the setup process. It will then walk you through enabling service endpoints on your desired virtual network and subnet.

DNS Behavior With Service Endpoints

DNS behavior remains as-is when leveraging service endpoints. The DNS name of a given resource will always resolve to the resource’s public IP address regardless of where the traffic originates. This is because the service endpoint doesn’t change the network interface IP address of the resource it was added to. In doing a DNS lookup from an Azure virtual machine, we get a CNAME to cloudapp.net which gives us 20.185.217.251. This lookup returns the same result whether it is executed from the virtual machine living in the VNet or from my local machine at home.
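One way to check this yourself is to resolve the resource's hostname from both vantage points and classify what comes back. The sketch below uses only the Python standard library; `resolve_and_classify` is a hypothetical helper name, and you would pass in your own resource's FQDN (none is hard-coded here because the original lookup target is not given).

```python
import ipaddress
import socket


def classify(address: str) -> str:
    """Label an IP address as 'private' or 'public'."""
    return "private" if ipaddress.ip_address(address).is_private else "public"


def resolve_and_classify(hostname: str) -> dict:
    """Resolve a hostname and label each returned IPv4 address.

    Requires working DNS; run it once from a VM inside the VNet and
    once from a machine outside Azure, then compare the results.
    """
    _canonical, _aliases, addresses = socket.gethostbyname_ex(hostname)
    return {addr: classify(addr) for addr in addresses}


# The address quoted above is public; a VNet address such as 10.5.1.4 is not.
print(classify("20.185.217.251"))  # public
print(classify("10.5.1.4"))        # private
```

With a service endpoint in place, both vantage points should report the same public address, since only the routing and source identity change, not name resolution.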

Service Endpoint Tradeoffs

Leveraging service endpoints is an excellent way to harden your VNet and service communication. As with everything in technology, though, there are tradeoffs to consider.

- Connection initiation with service endpoints is unidirectional; The initiator must be inside the subnet that holds the integration

- From a VNet’s vantage point, service endpoints provide access to the entire PaaS service; You cannot target specific instances of a PaaS service

- Once enabled on a subnet, clients in the subnet have network level access to all instances of that particular service (including those belonging to other users); To mitigate the risk of leakage or data exfiltration, Service Endpoint Policies should be used

Service Endpoint Policies enable VNet owners to control precisely which instances of a service type can be accessed from their VNet

- The source is now a private IP address, but the destination is still the service resource’s public IP address; Traffic is still leaving your virtual network (Whether this is a tradeoff is debatable)

- Service Endpoints override any BGP or UDR routes for the address prefix match of any Azure service; While this isn’t necessarily a problem, it introduces another traffic pattern that must be accounted for

When a service endpoint is created, routes for all the public prefixes used by the service type get added to the subnet’s route table. The next-hop is set to a value of VirtualNetworkServiceEndpoint. All packets that match that next hop are encapsulated in outer packets, which carry information about the identity of their source virtual network.

- Service Endpoints do not work across Azure AD Tenants; This is not generally a problem in smaller environments but may require a workaround in larger or non-standard environments

- Endpoints can’t be used for traffic originating from on-premises networks

When service endpoints launched, the recommended solution for connecting from on-premises was to set up Microsoft Peering, which comes with additional complexity and considerations. This would involve identifying the Azure service resource side’s public IP addresses and allowing via resource IP firewall and the ExpressRoute / On-Premises firewall. Why not just use internet at this point?
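The route override described in the tradeoffs above can be sketched as a toy route table. This is a simplified model, not Azure's actual route selection logic: it assumes longest-prefix match first, and lets a `VirtualNetworkServiceEndpoint` route win a tie on the same prefix, following the statement above that service endpoints override BGP and UDR routes. The `20.185.0.0/16` service prefix and all route entries are hypothetical; real service prefixes are managed by the platform.

```python
import ipaddress
from dataclasses import dataclass
from typing import List, Optional

SERVICE_ENDPOINT = "VirtualNetworkServiceEndpoint"


@dataclass(frozen=True)
class Route:
    prefix: str    # destination address prefix in CIDR notation
    next_hop: str  # next-hop type for matching traffic
    source: str    # where the route came from (illustrative label)


def select_route(destination: str, routes: List[Route]) -> Optional[Route]:
    """Pick a route: longest prefix wins, service endpoint wins ties."""
    dest = ipaddress.ip_address(destination)
    candidates = [r for r in routes if dest in ipaddress.ip_network(r.prefix)]
    if not candidates:
        return None
    return max(
        candidates,
        key=lambda r: (
            ipaddress.ip_network(r.prefix).prefixlen,
            r.next_hop == SERVICE_ENDPOINT,
        ),
    )


# Hypothetical effective routes for a subnet with a service endpoint enabled.
routes = [
    Route("10.5.0.0/16", "VnetLocal", "Default"),
    Route("0.0.0.0/0", "VirtualAppliance", "User"),      # force-tunnel UDR
    Route("20.185.0.0/16", "VirtualAppliance", "User"),  # UDR on the same prefix
    Route("20.185.0.0/16", SERVICE_ENDPOINT, "Default"),
]

print(select_route("20.185.217.251", routes).next_hop)  # VirtualNetworkServiceEndpoint
print(select_route("8.8.8.8", routes).next_hop)         # VirtualAppliance
print(select_route("10.5.1.4", routes).next_hop)        # VnetLocal
```

The practical takeaway is that traffic to the service's public prefixes bypasses a force-tunnel UDR, which is the extra traffic pattern a firewall or inspection design has to account for.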

Private Link

To address additional customer demand surrounding integration and security, Private Link was born. It is the latest solution to integrate services with a shared architecture. It addresses limitations of service endpoints by exposing PaaS services via private IP addresses taken from a VNet’s RFC1918 space.

Breaking Down The Components

- Virtual Networks (VNets) are created in a subscription with private address space and provide network-level containment of resources with no traffic allowed by default between any two virtual networks. Any communication between virtual networks needs to be explicitly provisioned.

- Private Endpoint establishes a logical relationship between a public service instance and a NIC attached to the VNet where the service is exposed.

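- Private Link is the platform capability that enables you to access Azure PaaS services over a private endpoint; a Private Link Service, by contrast, is the resource you create to expose your own service (running behind a Standard Load Balancer) to consumers. The PaaS services supported today are listed in the official Private Link documentation.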

Problems Solved By Private Link

- Using a private endpoint enables flexible PaaS integration with your own private address space

- With no public IP addresses exposed, network-based access restrictions become much simpler or are not required at all; This simplifies network design as a whole

- Private Link enables on-premises clients to use existing ExpressRoute Private Peering or an existing VPN to consume PaaS services via private IP addresses

- A private endpoint is mapped to an instance of a PaaS resource instead of the full service, meaning consumers can only connect to that specific resource; This provides additional built-in protection against data leakage

- Connectivity from a designated VNet to a partner’s VNet (inside or outside your organization) is now possible; This creates new opportunities for B2B (Business-to-Business) integrations which can leverage Azure native connections without the necessity for public endpoints
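The difference in scope between the two approaches can be expressed as a small conceptual model. This is not an Azure API: the class names, the instance names, and the private IP are hypothetical, and the service endpoint is modeled without a Service Endpoint Policy applied.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ServiceEndpoint:
    """Opens a subnet to an entire service type (no policy applied)."""
    service_type: str

    def can_reach(self, service_type: str, instance: str) -> bool:
        # Any instance of the service type is reachable at the network level.
        return service_type == self.service_type


@dataclass(frozen=True)
class PrivateEndpoint:
    """Maps one private IP in the VNet to one specific service instance."""
    private_ip: str
    service_type: str
    instance: str

    def can_reach(self, service_type: str, instance: str) -> bool:
        # Only the single mapped instance is reachable.
        return (service_type, instance) == (self.service_type, self.instance)


# Hypothetical instances: one we own, one belonging to somebody else.
se = ServiceEndpoint("Microsoft.Sql")
pe = PrivateEndpoint("10.5.1.4", "Microsoft.Sql", "our-sql-server")

print(se.can_reach("Microsoft.Sql", "our-sql-server"))       # True
print(se.can_reach("Microsoft.Sql", "someone-elses-server"))  # True
print(pe.can_reach("Microsoft.Sql", "our-sql-server"))       # True
print(pe.can_reach("Microsoft.Sql", "someone-elses-server"))  # False
```

The last line is the built-in exfiltration protection: a compromised client cannot use the private endpoint as a path to an attacker-controlled instance of the same service.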

Setting Up A Private Endpoint

Using the Azure Key Vault example again, you will have an option to use Private Endpoint during the setup process. It will then walk you through setting up all the necessary configuration for a private endpoint and optionally creating a Private DNS Zone. Following the defaults, it will also create the A Record for you.

DNS Behavior With Private Endpoints

One of the more significant differences between service endpoints and private endpoints is with DNS. In doing a DNS lookup from an Azure virtual machine, we directly resolve the private IP address 10.5.1.4. If we did this same lookup from a machine outside of our VNet or Azure, then we would get a CNAME to cloudapp.net which would then give us the public IP address of the service.
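The split behavior can be simulated with two in-memory record sets: one standing in for public DNS and one for the Private DNS Zone linked to the VNet. Every hostname below is an illustrative assumption (including the `privatelink` alias and the `cloudapp.net` backend name); only the two IP addresses come from the lookups described above.

```python
# Records visible to everyone (hypothetical names).
PUBLIC_DNS = {
    "myvault.vault.azure.net": ("CNAME", "myvault.privatelink.vaultcore.azure.net"),
    "myvault.privatelink.vaultcore.azure.net": ("CNAME", "backend.example.cloudapp.net"),
    "backend.example.cloudapp.net": ("A", "20.185.217.251"),
}

# Records visible only to resolvers inside the VNet (the Private DNS Zone).
PRIVATE_ZONE = {
    "myvault.privatelink.vaultcore.azure.net": ("A", "10.5.1.4"),
}


def resolve(name: str, inside_vnet: bool, max_depth: int = 10) -> str:
    """Follow CNAMEs until an A record is found.

    Inside the VNet, the private zone is consulted first and shadows
    any public record with the same name.
    """
    for _ in range(max_depth):
        record = PRIVATE_ZONE.get(name) if inside_vnet else None
        if record is None:
            record = PUBLIC_DNS.get(name)
        if record is None:
            raise LookupError(f"no record for {name}")
        kind, value = record
        if kind == "A":
            return value
        name = value  # follow the CNAME to the next name
    raise LookupError("CNAME chain too long")


print(resolve("myvault.vault.azure.net", inside_vnet=True))   # 10.5.1.4
print(resolve("myvault.vault.azure.net", inside_vnet=False))  # 20.185.217.251
```

The same FQDN yields two different answers depending on where the query originates, which is exactly why on-premises resolvers need a deliberate forwarding design before they can reach the private address.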

Private Link Tradeoffs

- Connection initiation with Private Link is unidirectional; The initiator can be multiple VNets or on-premises networks

- As of today, NSGs are not supported on private endpoints; While subnets containing the private endpoint can have an NSG associated, its rules have no effect on traffic processed by the private endpoint

- DNS integration is a major part of Private Link and brings additional complexity; Mapping design and configuration to outcomes requires significant planning

- Transitioning infrastructure from service endpoints to private endpoints will require additional planning and downtime

Expanding On DNS Complexity

In the simplest scenario, an instance of a service that supports Private Link can be accessed concurrently through the service’s public IP address and through private endpoints (across multiple VNets). During provisioning, each instance of a public service is assigned a unique and publicly resolvable FQDN. With a service operating in a shared architecture, multiple instances run on the same set of resources and share the same IP address. To handle resolution, CNAME records map the FQDNs of each instance back to the same address.

Private Link, by design, requires DNS behavior to change based on origin (inside or outside the VNet) and on whether a private endpoint exists for a given instance. Since, by default, the FQDN of the service resolves to a public IP, you need to configure DNS to map it to the private IP address allocated from your VNet. This should be planned out before adopting the service. One consistent truth I have learned working in technology is that some of the most bizarre problems leading to colossal time waste turn out to be misconfigured DNS. A great way to get ahead of these challenges is to learn how name resolution for resources in Azure works and to know your options for integrating with on-premises DNS.

Conclusion

Service Endpoints are easier to set up and manage, although integration with on-premises can be a challenge. Private Link is much more complex, and the details will differ across environments. Deploying Private Link requires much more up-front discovery and planning around existing design, which also segues into infrastructure as code and possibly automation outside of Azure (if you are leveraging an existing DNS solution).

Benjamin Franklin once said, “By failing to prepare, you are preparing to fail.” By taking that extra preparation, Private Link can offer much more granular control with how PaaS services are integrated into an environment. In the state of Cybersecurity post-pandemic, I predict that all the major cloud providers will prioritize the privatization of all PaaS endpoints.

Acknowledgements

Big thanks to the wise and insightful Steven Hawkins for taking the time to peer review this post.