Getting Started With Alkira And Terraform (Part 2)

In Part 1, we started with a scalable foundation that can adapt over time as the business grows and adjusts to changing markets. With Alkira’s Network Cloud, we take a cloud-native approach to enabling our customers’ transformation. No appliances need to be provisioned in remote VPCs or VNets, and no agents need to be installed on workloads. Getting started is as easy as kicking off a build pipeline. For Part 2, let’s connect some networks from AWS, Azure, and GCP.

Scenario

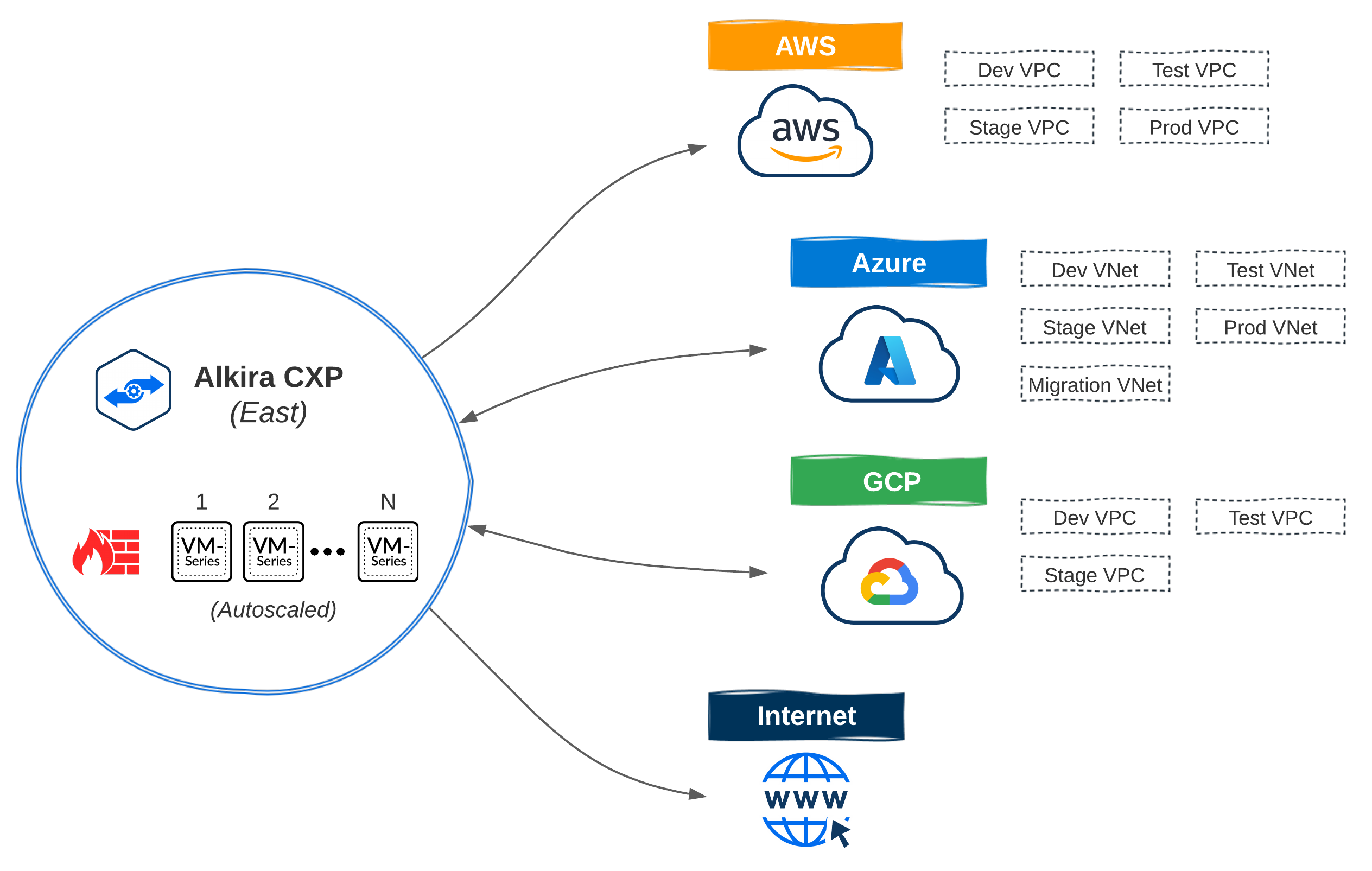

In Part 1, we set up a hypothetical Line of Business called LoB - Digital, which has the following network requirements:

- Cloud-native applications will be deployed in AWS; the application lifecycle requires DEV, TEST, STAGE, and PROD VPCs

- Azure gets the same network types as AWS for cloud-native workloads; in addition, Azure will get a MIGRATION VNet that acts as a landing zone for workloads being migrated from on-premises

- A new data analytics product is being established, and the product team wants to leverage GCP; the product is not production-ready, so only DEV, TEST, and STAGE VPCs are required
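The requirements above can be written down as plain Terraform locals, which is a handy way to sanity-check how many networks we are about to connect. This is only a sketch: the names `lob_digital_networks` and `network_keys` are hypothetical and are not taken from the original configuration.

```hcl
locals {
  # Environments required per cloud for LoB - Digital (from the list above).
  lob_digital_networks = {
    aws   = ["dev", "test", "stage", "prod"]
    azure = ["dev", "test", "stage", "prod", "migration"]
    gcp   = ["dev", "test", "stage"]
  }

  # Flatten into keys such as "aws-dev" or "azure-migration".
  network_keys = flatten([
    for cloud, envs in local.lob_digital_networks : [
      for env in envs : "${cloud}-${env}"
    ]
  ])
}

# Number of cloud networks to connect (4 + 5 + 3).
output "network_count" {
  value = length(local.network_keys)
}
```

A map like this could later drive a `for_each` on the connector resources, although splitting environments across workspaces, as described further down, may make that unnecessary.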

Since no appliances get installed inside cloud networks, how does Alkira interface with the cloud providers? Alkira takes the cloud-native approach of using the existing authentication methods in each cloud. For instance, this means IAM Policies in AWS and Service Principals in Azure. Most enterprises are already interacting with the cloud this way today, so integrating with their existing automation + pipeline strategy is seamless.

Resources

We will be using the following Terraform Resources in this post:

| Name | Type | Description |
|---|---|---|
| alkira_credential | data source | Reference existing credential |
| alkira_billing_tag | data source | Reference existing billing tag |
| alkira_connector_aws_vpc | resource | Provision connector for AWS VPC |
| alkira_connector_azure_vnet | resource | Provision connector for Azure VNet |
| alkira_connector_gcp_vpc | resource | Provision connector for GCP VPC |

Connecting The Cloud

Alkira’s Terraform Provider makes quick work of connecting cloud networks to our foundation. The following snippet will connect an Azure VNet, place it in the group we provide, and attach a billing tag.

azure_connector.tf
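A minimal sketch of what such a file might look like is shown here. It is an illustration under assumptions, not the exact configuration used for this post: the variable names and resource labels are hypothetical, and the argument names (`azure_vnet_id`, `segment_id`, `billing_tag_ids`, and so on) follow my reading of the Alkira provider documentation and have changed between provider versions, so check them against the version you pin.

```hcl
terraform {
  required_providers {
    alkira = {
      # Assumed registry source for the Alkira provider.
      source = "alkiranet/alkira"
    }
  }
}

# Hypothetical input variables; values come from the workspace.
variable "credential_name"  { type = string }
variable "billing_tag_name" { type = string }
variable "segment_id"       { type = string }
variable "group_name"       { type = string }
variable "azure_vnet_id"    { type = string }
variable "cxp_region"       { type = string }

# Reference the credential that already exists in Alkira.
data "alkira_credential" "azure" {
  name = var.credential_name
}

# Reference the existing billing tag.
data "alkira_billing_tag" "digital" {
  name = var.billing_tag_name
}

# Connect the VNet, place it in the group, and attach the billing tag.
resource "alkira_connector_azure_vnet" "dev" {
  name            = "azure-vnet-dev" # hypothetical connector name
  azure_vnet_id   = var.azure_vnet_id
  credential_id   = data.alkira_credential.azure.id
  cxp             = var.cxp_region
  segment_id      = var.segment_id
  group           = var.group_name
  size            = "SMALL"
  billing_tag_ids = [data.alkira_billing_tag.digital.id]
}
```

The AWS and GCP connectors follow the same shape with `alkira_connector_aws_vpc` and `alkira_connector_gcp_vpc`, swapping the VNet ID for the cloud-specific network identifiers.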

Organizing Things

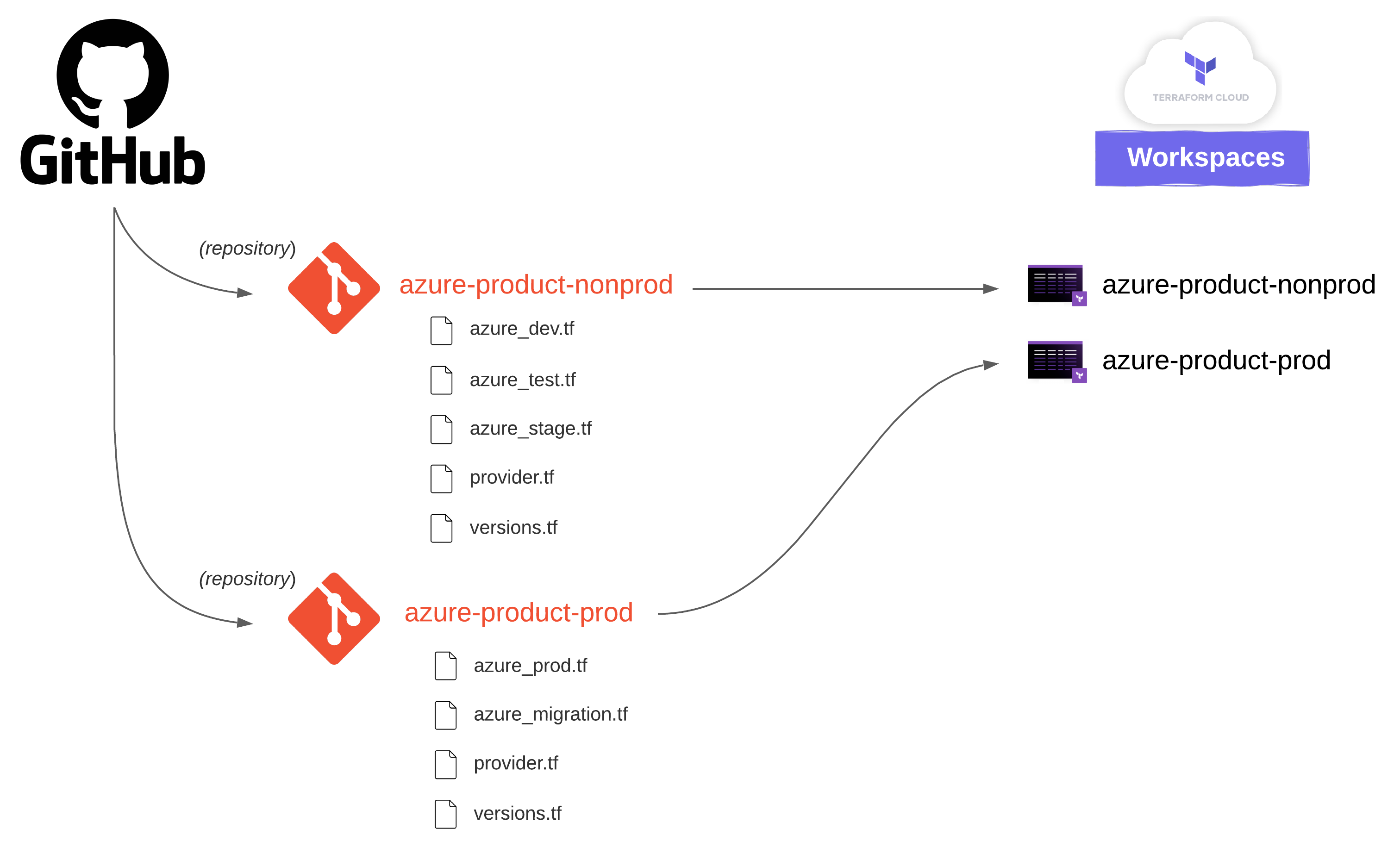

HashiCorp recommends One Workspace Per Environment Per Terraform Configuration. Since we are provisioning and connecting so many networks + environments across all three cloud providers, I simplified a few things. With Azure, for example, repositories map to Workspaces like this:

Provisioning

As in Part 1, we will use Terraform Cloud for provisioning. A successful merge to our main branch will automatically trigger a plan and apply.

Validation

Twelve VPCs/VNets across three public clouds couldn’t be easier! By default, networks connected to our corporate segment have full-mesh connectivity to each other. Later in this series, we will build automated policies to work with our groups that produce some logical micro-segmentation.

Conclusion

In Part 1, we built a scalable foundation, and in this post, we connected networks from AWS, Azure, and GCP to it. One area where enterprises struggle is securely connecting their data centers or remote offices to the cloud. This use case often maps back to migrating workloads to the cloud or running Tiered Hybrid Workloads. In Part 3, we will add a few on-premises networks to the mix to see how Alkira can help solve this problem.